Integrations via Apache Kafka for LARGE businesses. What are the benefits and limitations?

And what solutions can be implemented together with Kafka to relieve all the headaches about integrations?

Large businesses have many problems in the integration layer between IT systems, and tools such as Apache Kafka or other message brokers can solve some of them.

In this article, we'll figure out which configurations of these tools solve which problems, and which classes of problems go away at each level.

The IT landscape of any big business is like a huge metropolis. No two cities are alike, yet they all share similar elements and problems. A city is a combination of systems, approaches, configurations, service costs, infrastructure, and so on, and so is IT: one city has a good subway but a lot of traffic jams; another has little crime, but the shops close for siesta.

We would all like an ideal city where shops are open 24/7, there is no crime, and not only people but also goods and services can get anywhere without traffic jams.

It's the same with integrations between IT systems: one system has been polished to the point where it produces very high-quality data, but there are ghettos in the form of other systems or their components, where everything is unstable: constant errors, and data duplicated both within a system and across neighboring ones. As a result, all this traffic jams the roads and highways. People miss important meetings, and goods arrive late or not at all.

When a global cataclysm happens and the whole city gets stuck in traffic, you need a superhero who can take the situation into their mighty hands and sort out the chaos... until next time.

And so our superhero today is the Apache Kafka message broker.

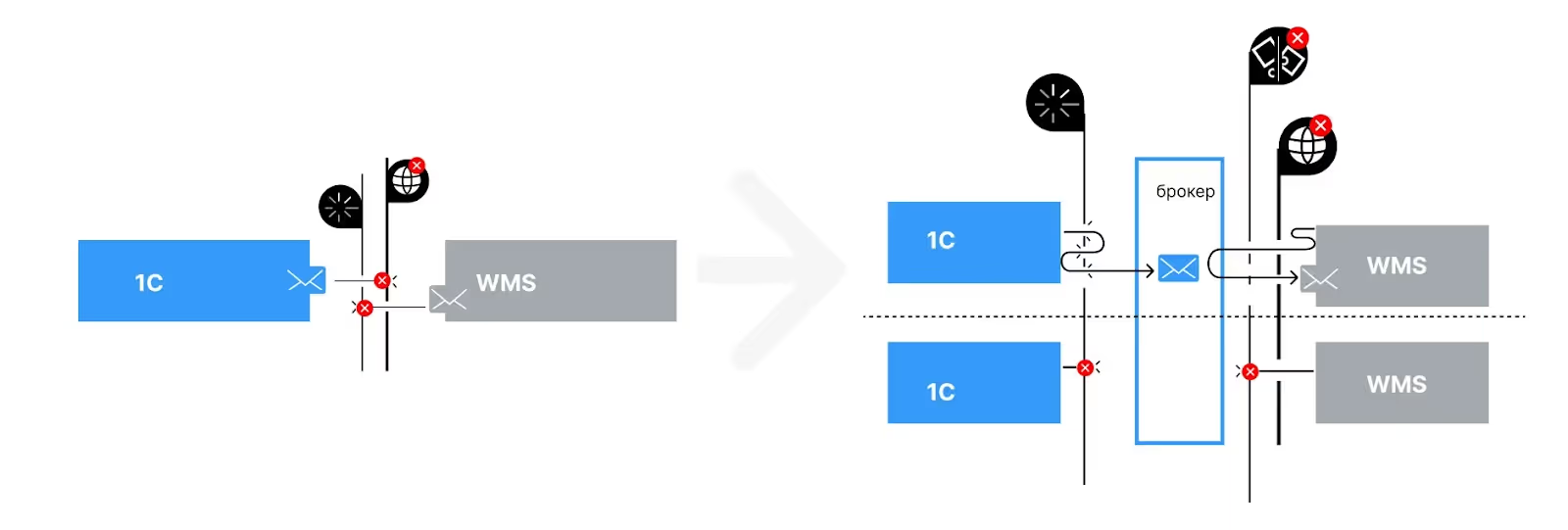

Using one of these solutions as an example, let us consider a typical situation. The business has the problems described above and decides to integrate through a message broker like Kafka.

In terms of benefits, this looks like a completely rational decision.

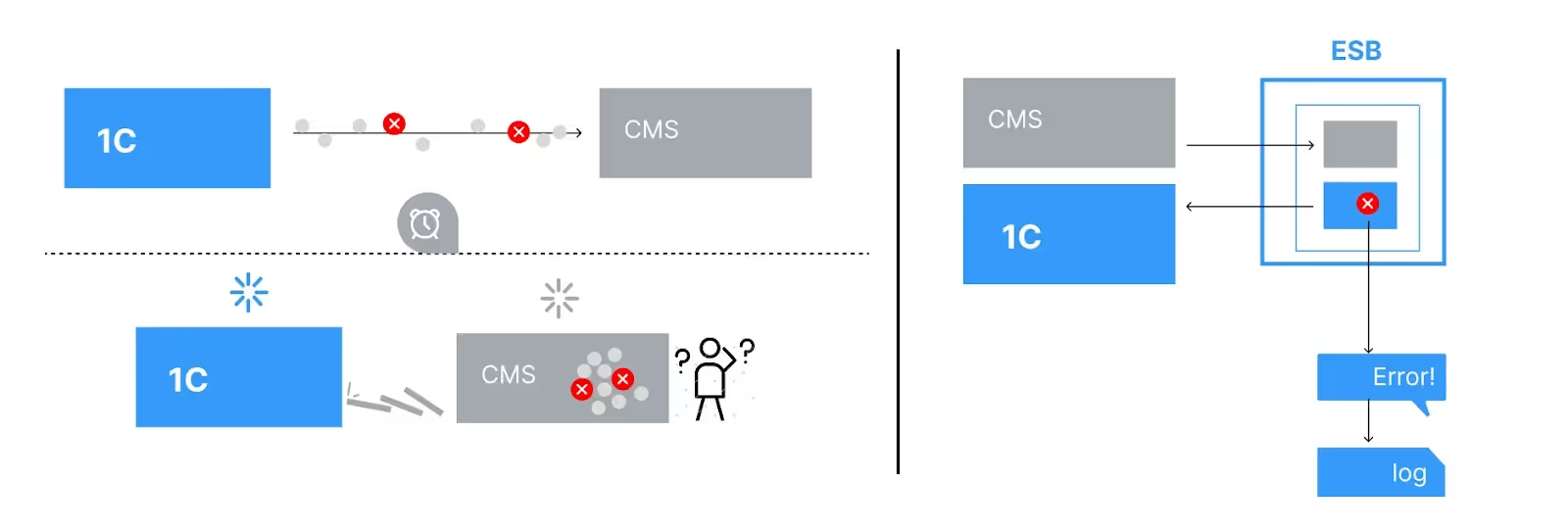

Indeed, a message broker helps reduce peak load on systems and reduce data loss. The systems no longer have to manage message delivery and receipt directly.

Introducing a message broker reduces data loss but does not rule it out in every scenario! A broker is a vehicle, not a brain: all the logic for controlling delivery and receipt still lives in the connected systems.

But integrations built only on a broker do not GUARANTEE delivery if:

- the recipient is not ready
- the format does not match
- the processing logic has not been worked out
- the retention period has expired
- there is simply no acknowledgment of receipt
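Two of these failure modes can be sketched in a few lines of Python. This is a toy in-memory model, not Kafka itself: `ToyBroker`, `deliver`, and `order_handler` are hypothetical names invented for illustration, and timestamps are passed in by hand so the example is deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class ToyBroker:
    """In-memory stand-in for a broker topic with time-based retention (illustrative only)."""
    retention_seconds: float
    _log: list = field(default_factory=list)  # (timestamp, payload) pairs

    def publish(self, payload: dict, now: float) -> None:
        self._log.append((now, payload))

    def poll(self, now: float) -> list:
        # Messages older than the retention window are dropped,
        # whether or not anyone ever read them.
        self._log = [(ts, p) for ts, p in self._log if now - ts <= self.retention_seconds]
        return [p for _, p in self._log]


def order_handler(payload: dict) -> None:
    # Assumption: the receiving system expects an integer "order_id".
    if not isinstance(payload["order_id"], int):
        raise ValueError("order_id must be an integer")


def deliver(broker: ToyBroker, now: float, handler) -> tuple:
    """Return (accepted, rejected); the broker itself validates nothing."""
    accepted, rejected = [], []
    for payload in broker.poll(now):
        try:
            handler(payload)
            accepted.append(payload)
        except (KeyError, ValueError) as exc:
            rejected.append((payload, str(exc)))
    return accepted, rejected


broker = ToyBroker(retention_seconds=60)
broker.publish({"order_id": 1}, now=0)       # expires before the consumer wakes up
broker.publish({"order_id": "A-2"}, now=50)  # wrong format for this consumer
broker.publish({"order_id": 3}, now=55)

# The consumer only comes online at t=100.
accepted, rejected = deliver(broker, now=100, handler=order_handler)
```

Only the third order is accepted. The first is silently gone, and the second is transported successfully but is useless to the receiver, which is exactly the gap that the broker alone does not close.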

Note also that a broker does nothing to help analyze the causes of errors or of overloads on peak days such as Black Friday. Broker or no broker, such errors and overloads often occur without anyone understanding why.

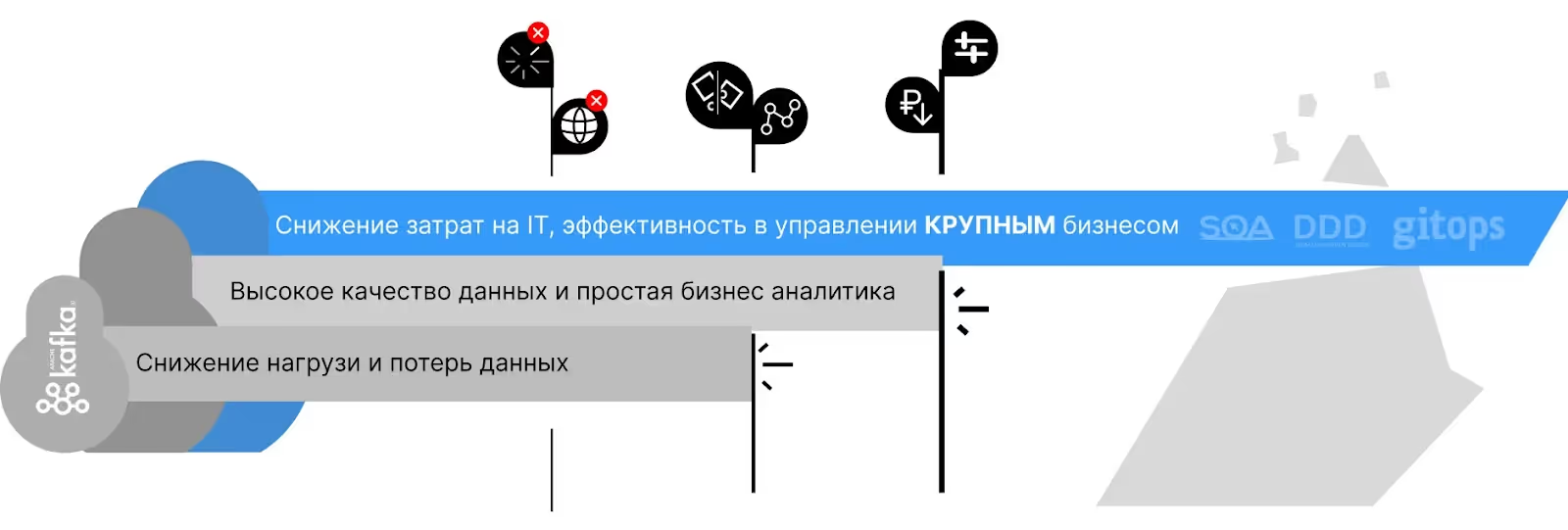

What solutions can be implemented together with Kafka to relieve all the headaches about integrations?

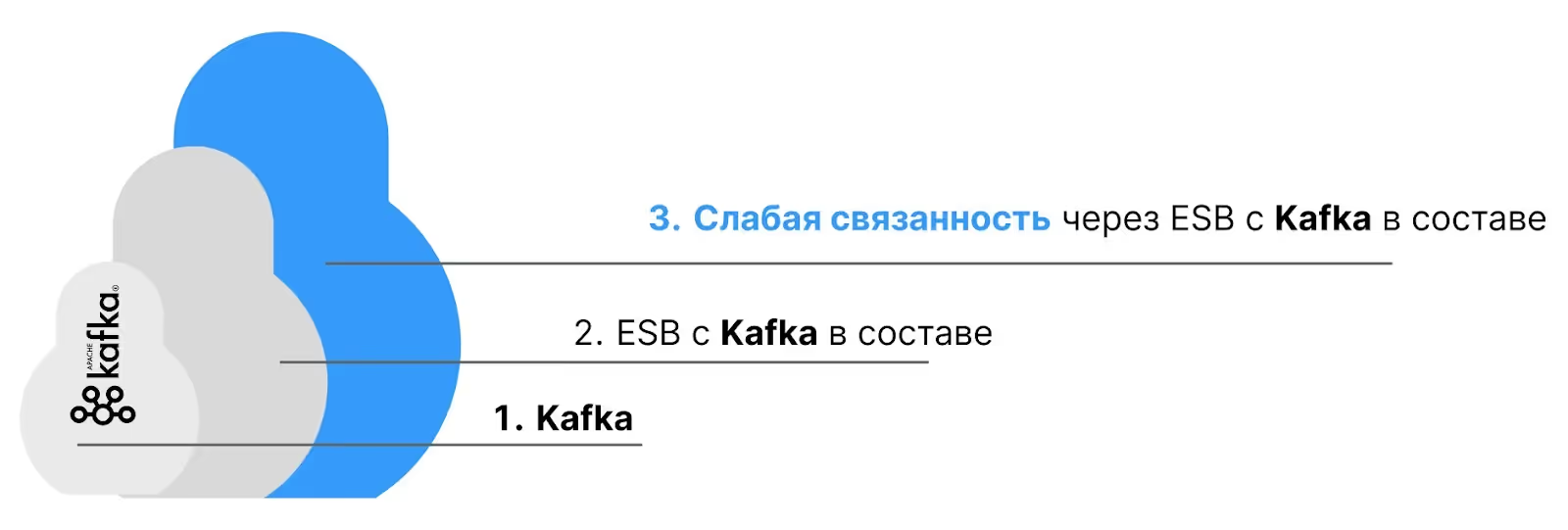

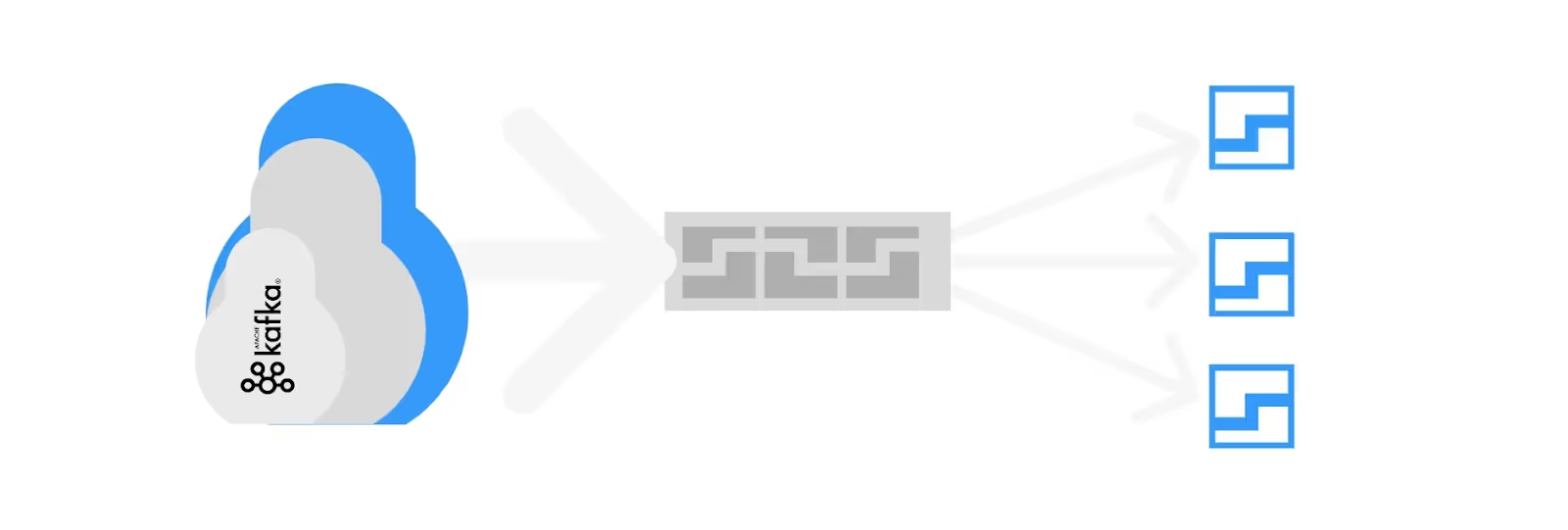

1. Kafka
   - Peak load on systems is reduced
   - Data loss is reduced
   - Asynchronous: systems don't have to be online all the time to communicate
2. ESB with Kafka as a part
   - Data loss is avoided
   - The load on the systems is significantly reduced
   - Business intelligence (BI) is facilitated
3. Loose coupling via an ESB with Kafka as a part
   - You can change systems at a click
   - IT costs are significantly reduced
   - Transferability: support is easy to hand over to another IT team
   - High data quality = simple business intelligence

2. ESB with Kafka as a part

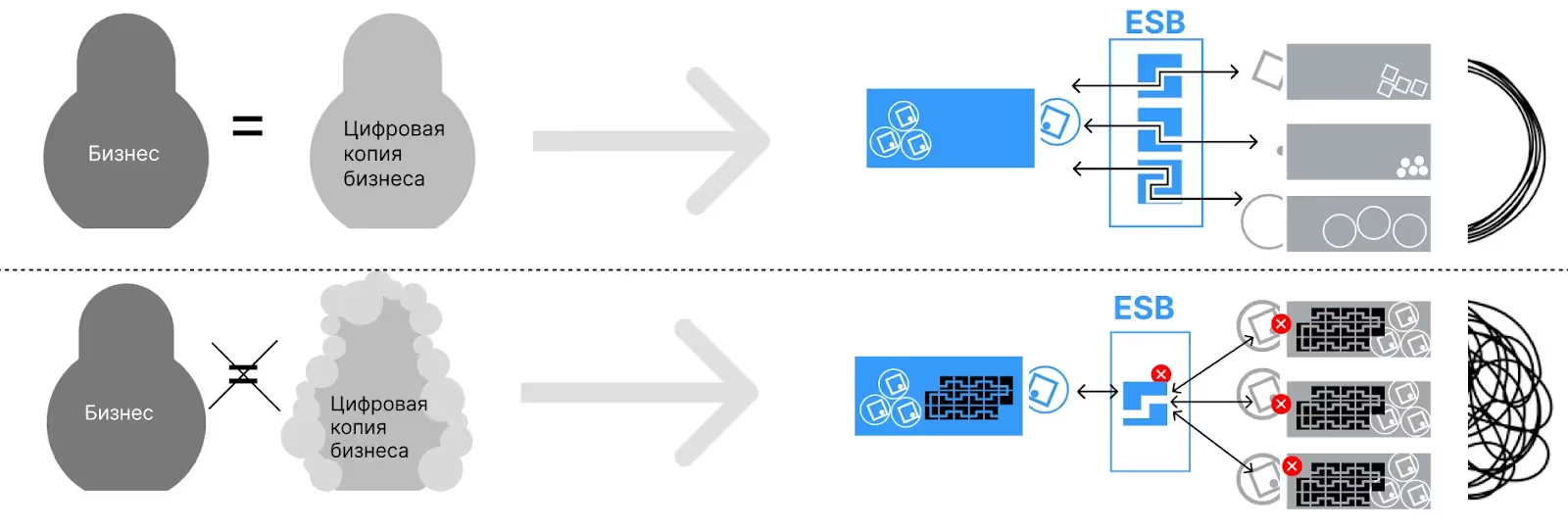

To obtain the above benefits, it is important to understand that, like nesting dolls, none of these layers can exist without the others.

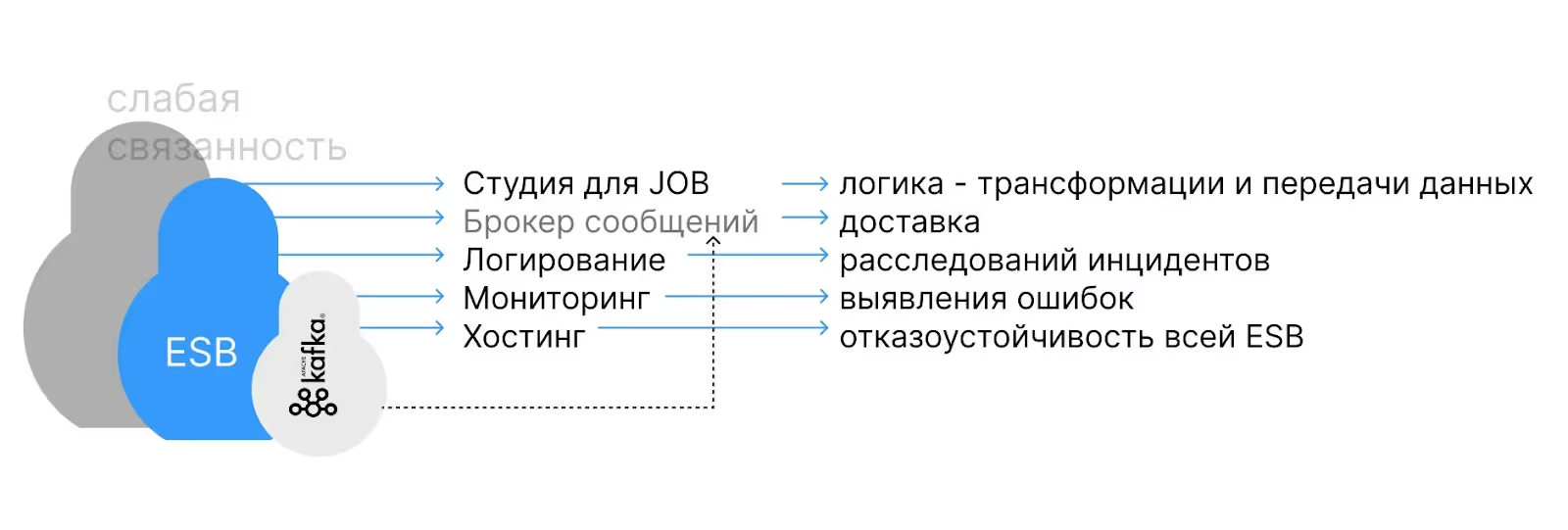

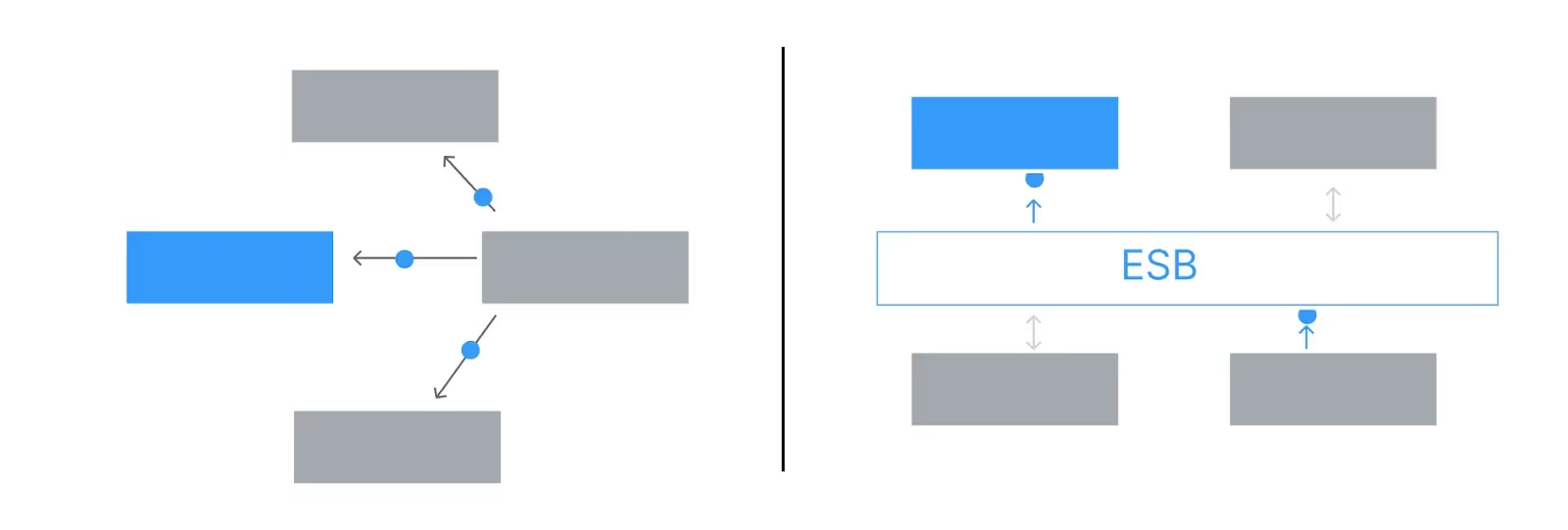

What is ESB?

An ESB is an enterprise service bus. It is often confused with simply having a message broker, but a broker is only the transport bus. An ESB is a set of components that together form an ideal integration layer, providing transformation logic, routing, error detection, and incident investigation, all running on dedicated hosting that ensures resiliency.

Essentially, an ESB is an interpreter who conveys the essence efficiently, without losing meaning and taking the cultural context into account. Systems are “citizens” of different countries. An ESB can serve an entire tourist bus where everyone speaks their own language: a single interpreter relays every message, and the bus enjoys an atmosphere of complete mutual understanding. Moreover, the interpreter remembers everything that was said and what was meant, so any conflict or misunderstanding can be sorted out by “pulling” the relevant dialogue from the interpreter's memory.

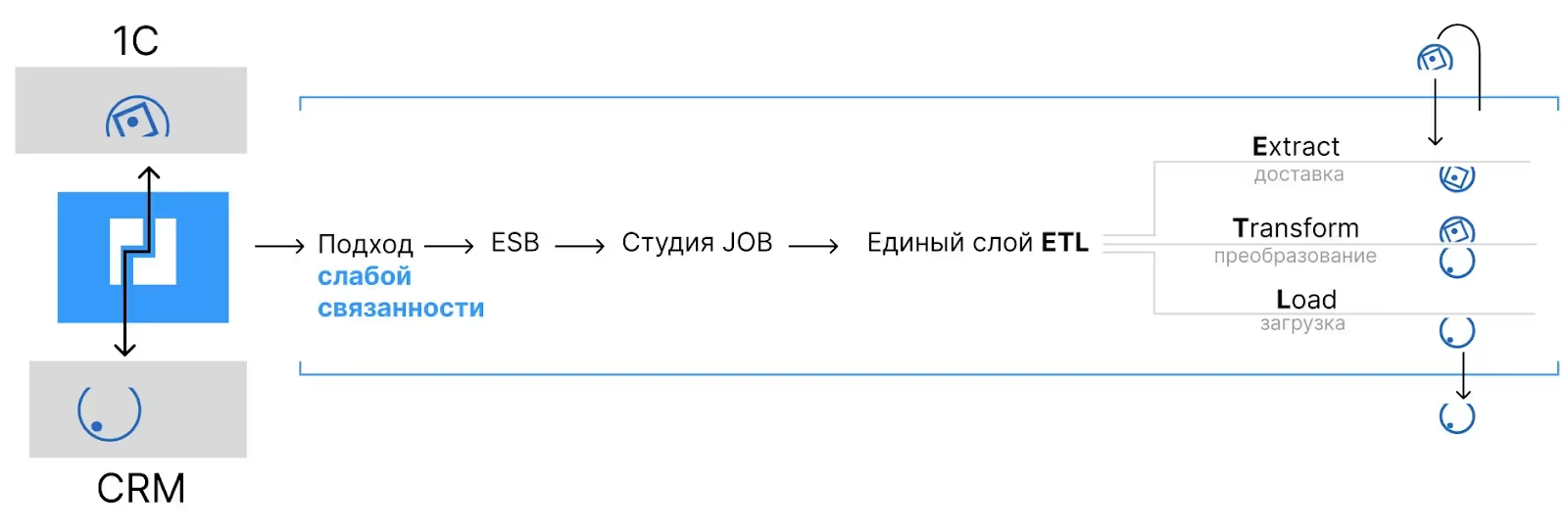

High data quality is a natural consequence of the loose coupling built through an ESB. It comes from strict transformation logic and clearly defined protocols for interaction between systems.

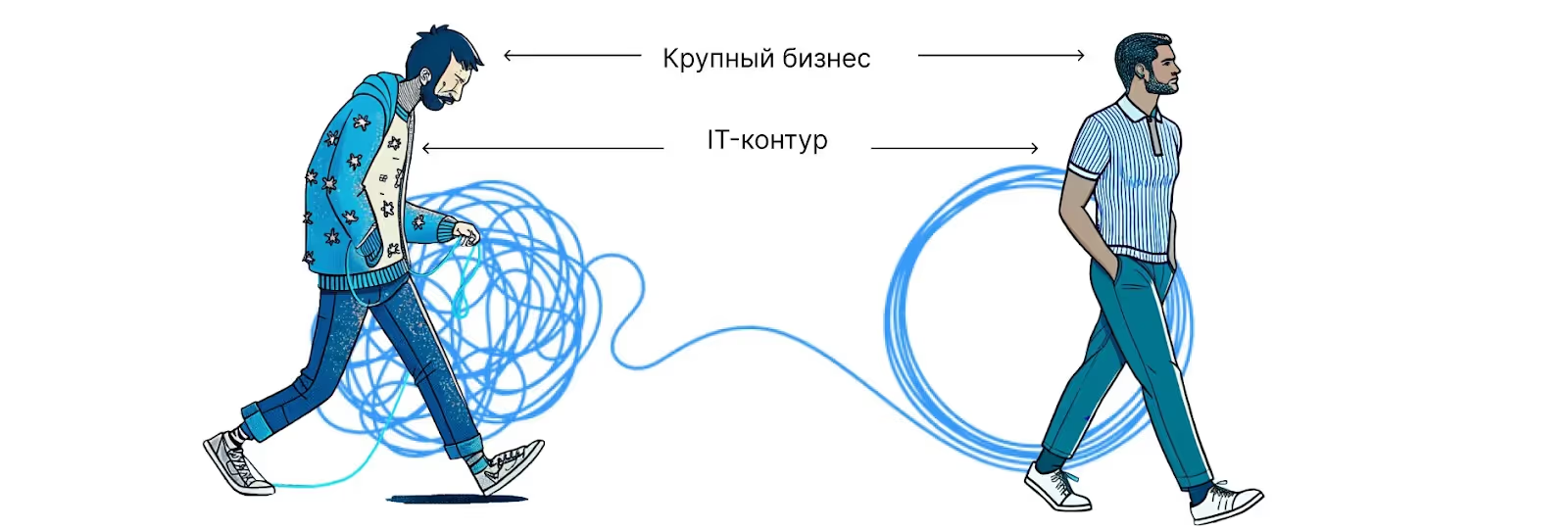

Here, the systems are unaware of each other's existence; their only task is to receive and transmit data. All transfer and transformation logic lives not in the systems but in the ETL layer, and it is clearly separated both architecturally and organizationally: the tangle of logical connections has been untangled, the unnecessary has been removed, and the “threads” that remain lie exactly where they are needed, without affecting the rest. Here you can easily replace one system with another or connect and launch a new one, without superheroes, titanic efforts, or huge resources.

IT is starting to work for business, not the other way around!

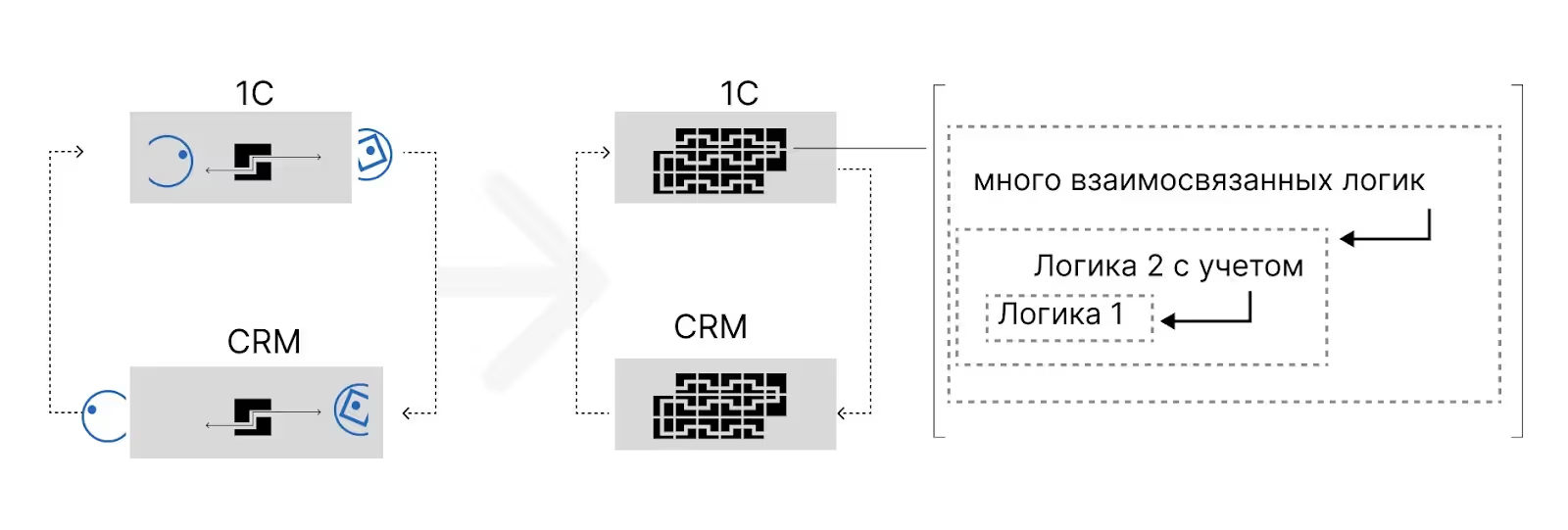

How does the loose coupling approach differ from direct integrations or integrations through a broker? And why does data quality degrade?

It is possible to exchange data directly and transform it within systems.

In that case you have to account for the transformation logic yourself: if something changes in one system, the other has to be “adapted”. At first this looks effective: we quickly connected via the API, the data flowed in, beautiful!

But without a single integration layer and common standards, without a loose coupling approach, this begins to fall apart over time. A typical picture looks like this:

- Many IT systems

- Each has its own logic of delivery and transformation

- Systems can be a source of dozens or even hundreds of entities with low-quality data, which leads to new errors and even more low-quality entities

- Tools that should “clean” the data, such as MDM and PIM, are introduced, but they treat the symptoms without eliminating the causes

As a result, each system has to account for the transformation logic and connections of all the others.

This is tight coupling: a tangle of dependencies that is hard to understand even within the team, let alone for external participants. The result is a “closed infrastructure” effect, where any change is a mini-project.

So simply implementing a message broker like Kafka, or even an ESB, without a loosely coupled architecture is like taking Citramon, a painkiller, for a wild hangover. You will feel a little better, but the root of the problem will remain untouched.

ESB is high-quality data and easy business intelligence (BI)!

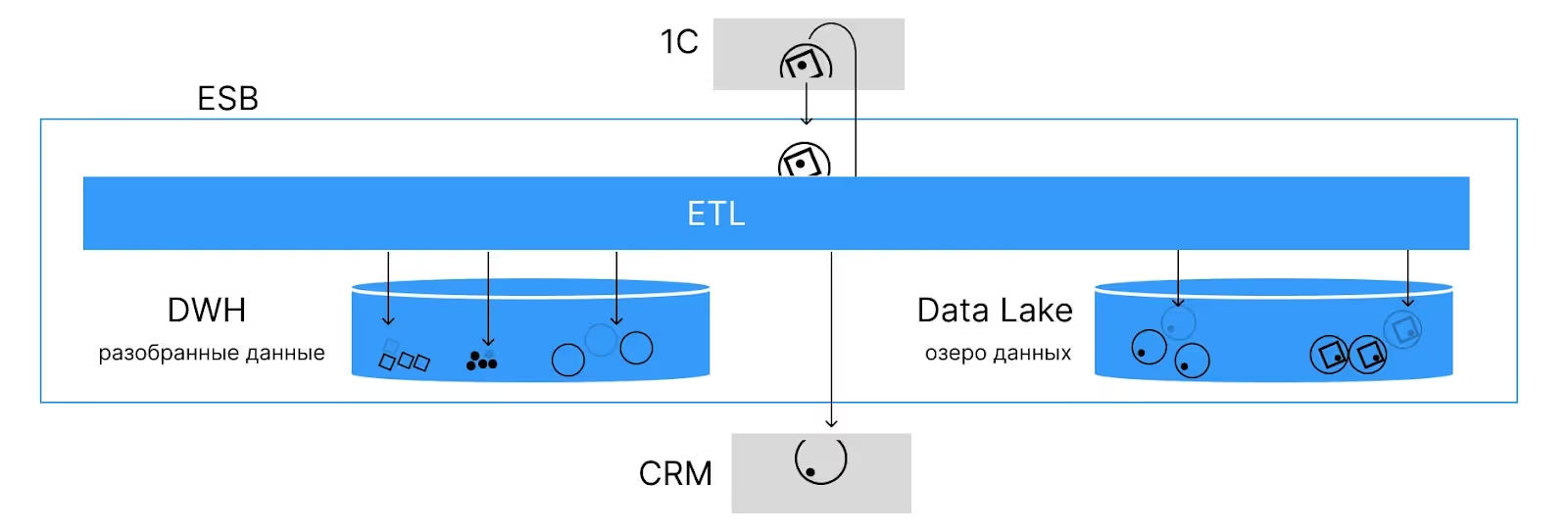

For two different systems, an entity such as a product card may be a different set of data. Through an ESB, it is easy to build a data transformation adapted to each system, which also reduces the load and eliminates transmission errors caused by an incorrect data format.
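As a minimal sketch of that idea, assume a canonical product card and two hypothetical consumers, a web shop and a warehouse. All field names and the `to_webshop`, `to_warehouse`, and `transform` helpers are invented for illustration; a real ESB would typically define such mappings in its own configuration or transformation layer.

```python
# Hypothetical canonical product card as it travels through the integration layer.
CANONICAL_CARD = {
    "sku": "TS-001",
    "name": "Cotton T-shirt",
    "price_cents": 1999,
    "currency": "EUR",
    "stock": 12,
    "weight_grams": 180,
}


def to_webshop(card: dict) -> dict:
    """The web shop needs display data and a formatted price, not logistics fields."""
    euros, cents = divmod(card["price_cents"], 100)
    return {
        "id": card["sku"],
        "title": card["name"],
        "price": f"{euros}.{cents:02d} {card['currency']}",
        "in_stock": card["stock"] > 0,
    }


def to_warehouse(card: dict) -> dict:
    """The warehouse only cares about identity, quantity, and weight."""
    return {
        "sku": card["sku"],
        "qty": card["stock"],
        "weight_grams": card["weight_grams"],
    }


# One adapter per target system; neither system knows about the other.
ADAPTERS = {"webshop": to_webshop, "warehouse": to_warehouse}


def transform(card: dict, target: str) -> dict:
    """Pick the adapter for the target system; an unknown target is an explicit error."""
    if target not in ADAPTERS:
        raise ValueError(f"no adapter registered for {target!r}")
    return ADAPTERS[target](card)


webshop_card = transform(CANONICAL_CARD, "webshop")
warehouse_card = transform(CANONICAL_CARD, "warehouse")
```

Each system receives only the fields it needs, in its own format, and adding a third consumer means registering one more adapter rather than touching the existing systems.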

The data is stored in parsed, structured form in the DWH and in its original form in the Data Lake. Everything here is transparent and neatly shelved. A business intelligence (BI) system can easily assemble any required report, and the BI system itself is easy to connect through the single ESB integration layer.

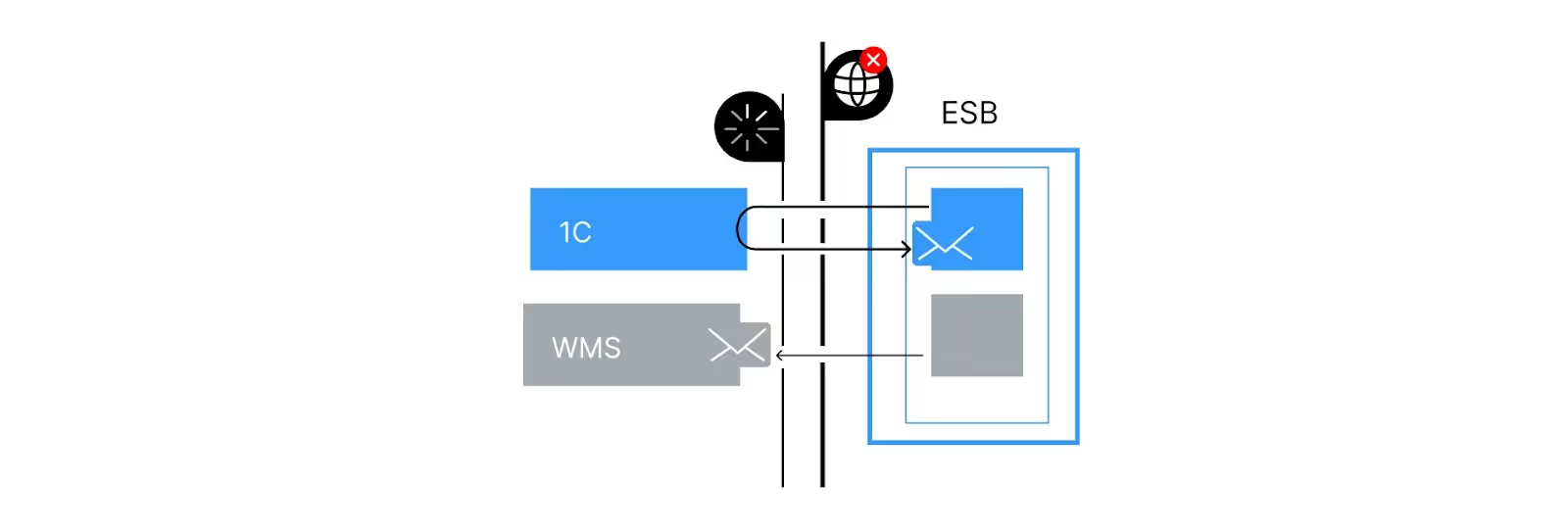

ESB is a guarantee of message delivery

The ESB itself monitors the availability of the network and systems. It picks up the data, converts it into the right format for each system, and sees the delivery through. Here, the single ETL layer is the brain, and Kafka or another broker handles the actual sending and receiving.
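The "brain" part can be illustrated with a small retry loop. This is only a sketch under stated assumptions: `FlakySystem` and `deliver_with_retry` are hypothetical names, the receiving systems are simulated in memory, and a real integration layer would also wait between attempts and persist undelivered messages.

```python
class FlakySystem:
    """Hypothetical receiving system that is unavailable for its first few calls."""

    def __init__(self, failures_before_ready: int):
        self.failures_before_ready = failures_before_ready
        self.received = []

    def receive(self, message: dict) -> None:
        if self.failures_before_ready > 0:
            self.failures_before_ready -= 1
            raise ConnectionError("system unavailable")
        self.received.append(message)


def deliver_with_retry(message: dict, target: FlakySystem, max_attempts: int, alerts: list):
    """Try delivery up to max_attempts times; record an alert if every attempt fails."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            target.receive(message)
            return attempt  # number of attempts it took
        except ConnectionError as exc:
            last_error = exc  # a real layer would back off before retrying
    alerts.append({"message": message, "error": str(last_error), "attempts": max_attempts})
    return None


alerts = []
order = {"order_id": 42}

# Recovers after two failures, so the third attempt succeeds.
warehouse = FlakySystem(failures_before_ready=2)
attempts_used = deliver_with_retry(order, warehouse, max_attempts=3, alerts=alerts)

# Stays down longer than we are willing to retry, so an alert is raised instead.
billing = FlakySystem(failures_before_ready=5)
billing_result = deliver_with_retry(order, billing, max_attempts=3, alerts=alerts)
```

The sending system is not involved in any of this: it hands the message over once, and the integration layer either completes the delivery or reports precisely which message failed and why.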

ESB is the identification of errors when they occur

In the ESB approach, proactive monitoring lets you receive a notification the moment an error occurs, quickly view the log, and quickly fix the cause. With direct integrations, by contrast, problems are often discovered only by indirect signs: when systems start to fail one after another like dominoes or, even worse, when customers report that their orders have been lost.

ESB - system load is significantly reduced

An ESB reduces the load on systems because it not only transfers, buffers, and converts data, but also routes and orchestrates data flows. Instead of one system asking the other directly (and “hanging” while it waits for an answer), the ESB takes the data, temporarily stores it, transforms it into the right format, and transmits it when the receiving end is ready.

Moreover, the ESB knows where, when, and in what order to transfer data, thanks to built-in routing. And if the data flow must go through several stages (for example, validation, enrichment, logging, writing to storage), ESB orchestration manages this sequence without each system having to implement it separately.
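The stages and routing described above can be sketched as a small pipeline. Everything here is an illustrative assumption: the `ROUTES` table, the `REGION_BY_COUNTRY` lookup, the message fields, and the `process` function are hypothetical, and the outboxes are plain lists standing in for broker topics.

```python
import logging

logger = logging.getLogger("esb_sketch")

# Hypothetical routing table: message type -> systems that should receive it.
ROUTES = {"order": ["warehouse", "billing"], "refund": ["billing"]}
REGION_BY_COUNTRY = {"DE": "EU", "FR": "EU", "US": "NA"}


def validate(message: dict) -> dict:
    for key in ("type", "id", "country"):
        if key not in message:
            raise ValueError(f"missing field: {key}")
    return message


def enrich(message: dict) -> dict:
    # Add derived data once, centrally, instead of in every consuming system.
    return {**message, "region": REGION_BY_COUNTRY.get(message["country"], "unknown")}


def log_step(message: dict) -> dict:
    logger.info("processing %s %s", message["type"], message["id"])
    return message


# The order of stages is defined in one place, not in each system.
STAGES = [validate, enrich, log_step]


def process(message: dict, storage: list, outboxes: dict) -> None:
    for stage in STAGES:
        message = stage(message)
    storage.append(message)  # final stage: write to storage
    for target in ROUTES.get(message["type"], []):
        outboxes.setdefault(target, []).append(message)


storage, outboxes = [], {}
process({"type": "order", "id": 1, "country": "DE"}, storage, outboxes)
process({"type": "refund", "id": 2, "country": "BR"}, storage, outboxes)
```

Changing the sequence of stages or adding a new recipient for a message type is a change to `STAGES` or `ROUTES` only; none of the connected systems needs to be modified.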

As a result, systems become less dependent on each other and operate at their own pace, without overloads, timeouts, or manual control. The architecture becomes more flexible, and processes become more stable and scalable.

3. Loose coupling via an ESB with Kafka as a part

Why would using an ESB in general, or Kafka in particular, without loose coupling not give the desired result?

All of the above is good, but to really build an IT landscape where you can change systems “at a click”, significantly reduce IT costs, and easily hand over support to another team, you need loose coupling. Only such an architecture will provide simple, accurate, and affordable business intelligence. This can be achieved by building loose coupling at both the architectural and organizational levels, through a well-thought-out enterprise service bus (ESB) that can use Kafka as a vehicle.

It is inefficient to simply implement “solutions” at the IT level here, because the digital copy of the business is increasingly at odds with reality. There is a gap between how real processes work and what is reflected in IT.

You can endlessly search for contractors, introduce new tools and trendy approaches. But as long as there is no identity between real and digital processes, all this will lead to errors and inconsistencies. The solutions will fall on top of these distortions, forming scar tissue with previous “solutions”. And this digital erosion will only increase: the digital copy of the business will become less and less relevant to reality.

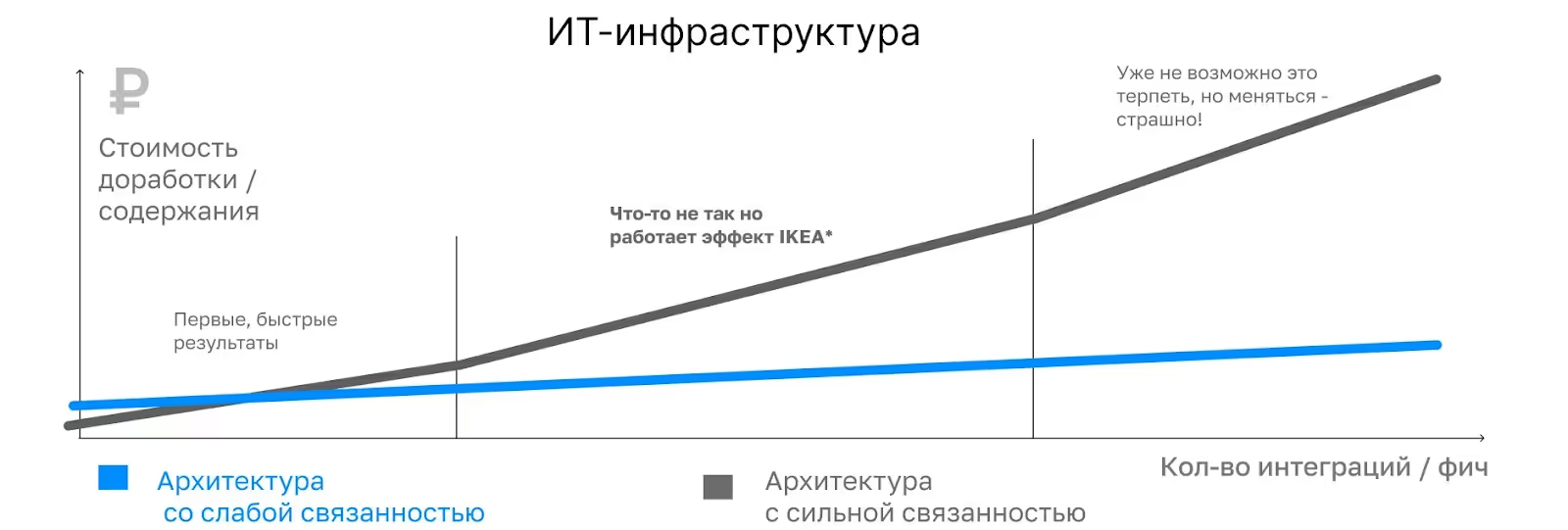

The result is always the same: loose coupling is not an abstract idea but a real factor affecting costs. Below is a graph that clearly shows how IT maintenance costs grow without loose coupling.

* The IKEA Effect is a cognitive bias in which a person overestimates the value of hand-made things, even if they are objectively worse.

We constantly see the same picture: at first, integrations are launched quickly through direct connections, which gives quick results and a sense of efficiency. But over time, the architecture begins to fall apart: each new connection adds fragility, duplication, and dependence on specific systems and people.

Without a single curator who keeps architecture in mind and manages connectivity, the IT landscape turns into a tangled sweater: warm but uncomfortable, overheated and full of holes. It's like a good athlete who trains on his own — he'll make progress. But when an experienced coach appears who sees from the outside, adjusts the technique and manages the load, significant results and records appear.

It is the same in integrations: loose coupling does not occur by itself; it is achieved through systematic work and architectural support.

There's some good news!

Usually, an ESB project does not begin with an attempt to rebuild the entire IT landscape at once, but with the most painful part of the business. For example, if the order department is overloaded, orders are lost, and employees are drowning in manual processing, that is where attention goes first. The ESB builds a loosely coupled integration between the systems: it automates the reception, transformation, and transfer of orders. After 2-3 months, what used to be chaos becomes a stable, scalable flow. And the business sees that it works, without a complete redesign or global refactoring, simply thanks to a competent architecture.

An island of loose coupling emerges, like a safe haven in the turbulent ocean of integrations, where a ship will always find refuge and will not have to fight for a berth with everyone else in need. It will be quickly unloaded and loaded without losing anything, the provisions will be sorted out, and the captain's only task is to stay on course without being distracted by all this turnover.

If you recognize yourself or your team in this article, I would be happy to hear how you see the solution to such problems. Perhaps you already have experience to share or an insight that will add another valuable layer to this topic. Write in the comments and we'll discuss it.

ESB — benefits for business management

ESB - advantages for building the IT landscape of a business
